
List of AI Essentials

Optimem

Master fundamental concepts, terminology, and key principles of modern artificial intelligence

Unverified Content

Use with discretion; this content may not be accurate or up to date.


LLM: Large language model; an AI trained on large amounts of text to predict and generate words

Token: Smallest unit of text an AI processes (a word, part of a word, or a character)

Fine-tuning: Training a pre-trained AI on specific data to specialize it

Hallucination: When an AI confidently generates false or nonsensical information

Context window: Maximum amount of text, measured in tokens, that an AI can consider at once, including the conversation so far and its own response
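Because the context window is a fixed token budget, chat applications often drop the oldest messages once a conversation grows too long. The Python sketch below shows that idea under a loud assumption: tokens are approximated by whitespace-separated words (the helper `approx_token_count` is hypothetical; real models count tokens with their own tokenizer, so real counts differ). `fit_to_context` is likewise an illustrative name, and `max_tokens` here is the budget left for history after reserving room for the response.

```python
def approx_token_count(text: str) -> int:
    """Hypothetical stand-in for a real tokenizer: one token per word."""
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Return the newest messages whose combined size fits in max_tokens."""
    kept: list[str] = []
    used = 0
    # Walk backwards so the most recent messages are kept first.
    for message in reversed(messages):
        cost = approx_token_count(message)
        if used + cost > max_tokens:
            break  # older messages no longer fit and are dropped
        kept.append(message)
        used += cost
    # Restore chronological order.
    return list(reversed(kept))


history = [
    "Hi, I am planning a trip to Japan.",
    "Great! When are you going?",
    "In April, for the cherry blossoms.",
    "Which cities should I visit?",
]
print(fit_to_context(history, max_tokens=12))
```

With a budget of 12, only the last two messages survive; the model would no longer "remember" the opening message about Japan.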

Embedding: A list of numbers (a vector) representing data such as text or images, arranged so that items with similar meanings get similar vectors
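"Similar meaning" between embeddings is commonly measured with cosine similarity: the closer to 1.0, the more the two vectors point in the same direction. The 3-dimensional vectors below are invented for illustration (real embedding models output hundreds or thousands of dimensions), so only the relative comparison matters.

```python
import math

# Toy "embeddings" invented for illustration.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(embeddings["cat"], embeddings["kitten"]))  # close to 1
print(cosine_similarity(embeddings["cat"], embeddings["car"]))     # much lower
```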

Inference: Using a trained AI to generate outputs (as opposed to training it)

Model weights: Learned parameters that determine how an AI responds

Attention mechanism: How an AI decides which parts of the input are most relevant to each part of its output
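The attention used in Transformers is usually the scaled dot-product form, softmax(Q K^T / sqrt(d)) V: each query is scored against every key, the scores become weights, and the output is a weighted average of the values. The pure-Python sketch below implements only that core formula; real models add learned projection matrices and multiple attention heads, which are left out here, and the small example vectors are invented.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of float vectors.

    keys and values must have the same number of rows.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Relevance of each key to this query, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one output dimension at a time.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
queries = [[5.0, 0.0]]  # resembles the first key
result = attention(queries, keys, values)
print(result)  # mostly the first value vector
```

Because the query matches the first key far better, almost all of the weight goes to the first value vector.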

Zero-shot learning: An AI performing a task without being shown any examples of it

Few-shot learning: An AI learning a task from just a handful of examples, which for LLMs are often supplied directly in the prompt
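With LLMs, the difference between zero-shot and few-shot is often just what goes into the prompt. The sketch below builds both variants for a sentiment task; the `Input:`/`Output:` layout and the function name `build_prompt` are illustrative choices, not a format any particular model requires.

```python
from typing import Sequence


def build_prompt(task: str, query: str, examples: Sequence[tuple[str, str]] = ()) -> str:
    """Zero-shot when examples is empty; few-shot when examples are given."""
    lines = [task, ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output pattern.
        lines.append(f"Input: {text}\nOutput: {label}\n")
    lines.append(f"Input: {query}\nOutput:")
    return "\n".join(lines)


task = "Classify the sentiment of the review as positive or negative."
zero_shot = build_prompt(task, "The battery died after a day.")
few_shot = build_prompt(
    task,
    "The battery died after a day.",
    examples=[("Loved it, works perfectly.", "positive"), ("Broke within a week.", "negative")],
)
print(zero_shot)
print("---")
print(few_shot)
```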

Overfitting: When an AI memorizes its training data instead of learning general patterns, so it performs poorly on new data

Reinforcement learning: Training an AI through trial and error by rewarding good actions and penalizing bad ones

RLHF: Reinforcement Learning from Human Feedback; training an AI using human preferences about which outputs are better

Generative AI: AI that creates new content (text, images, code, etc.)

Supervised learning: Training an AI with labeled examples showing the correct answers

Unsupervised learning: An AI finding patterns in data without labeled answers

Hyperparameter: A setting you configure before training (learning rate, batch size, etc.)

Epoch: One complete pass through all the training data during learning

Gradient descent: Optimization method that repeatedly adjusts the weights in the direction that most reduces the model's error (loss)
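Hyperparameters, epochs, and gradient descent come together in even the smallest training loop. The sketch below fits a line y = w*x + b to five invented points generated from y = 2x + 1, using full-batch gradient descent on mean squared error, so each loop iteration is exactly one epoch. The learning rate and epoch count are illustrative values, not recommendations.

```python
# Data generated from y = 2x + 1 (invented for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

learning_rate = 0.05  # hyperparameter: size of each step
epochs = 500          # hyperparameter: number of full passes over the data

w, b = 0.0, 0.0  # model weights, starting from zero
n = len(xs)
for epoch in range(epochs):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Step against the gradient to reduce the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # approaches 2 and 1
```

Setting the learning rate too high makes the updates overshoot and diverge, which is why it is tuned as a hyperparameter rather than learned.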

Bias (AI): Systematic errors or unfairness learned from training data

Latency: Time delay between sending a prompt and receiving a response

API: Application programming interface; lets your code send requests to a service, such as an AI model, and receive its responses

System prompt: Instructions, given before any user input, that define an AI's role and behavior
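Many chat-style AI APIs accept a list of messages, each with a role and some content, where the system prompt comes first. The sketch below only assembles such a list; the `role`/`content` field names follow a common convention but vary between providers, so treat the exact shape as an assumption, and `build_messages` is a hypothetical helper. No real API is called.

```python
def build_messages(system_prompt: str, user_input: str) -> list[dict[str, str]]:
    """Assemble a chat request: system prompt first, then the user's turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]


messages = build_messages(
    "You are a concise assistant for a cooking website. Answer in under 50 words.",
    "How long should I boil an egg?",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```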

RAG: Retrieval-Augmented Generation; the AI fetches relevant information (e.g., from documents) and adds it to its input before answering
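The retrieval half of RAG can be sketched in a few lines: pick the stored text most related to the question and place it in the prompt ahead of the question. To stay self-contained, this sketch ranks documents by shared words, a deliberate simplification; production RAG systems typically rank by embedding similarity, often via a vector database. The documents and function names are invented, and the final step of sending the prompt to a model is omitted.

```python
import re

# A tiny in-memory "knowledge base" invented for illustration.
documents = [
    "The office is open Monday to Friday, 9am to 5pm.",
    "Refunds are processed within 14 days of receiving the returned item.",
    "Shipping to Canada takes 5 to 7 business days.",
]


def words(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, docs: list[str]) -> str:
    """Insert the retrieved text into the prompt before the question."""
    context = "\n".join(retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_rag_prompt("How long do refunds take?", documents))
```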

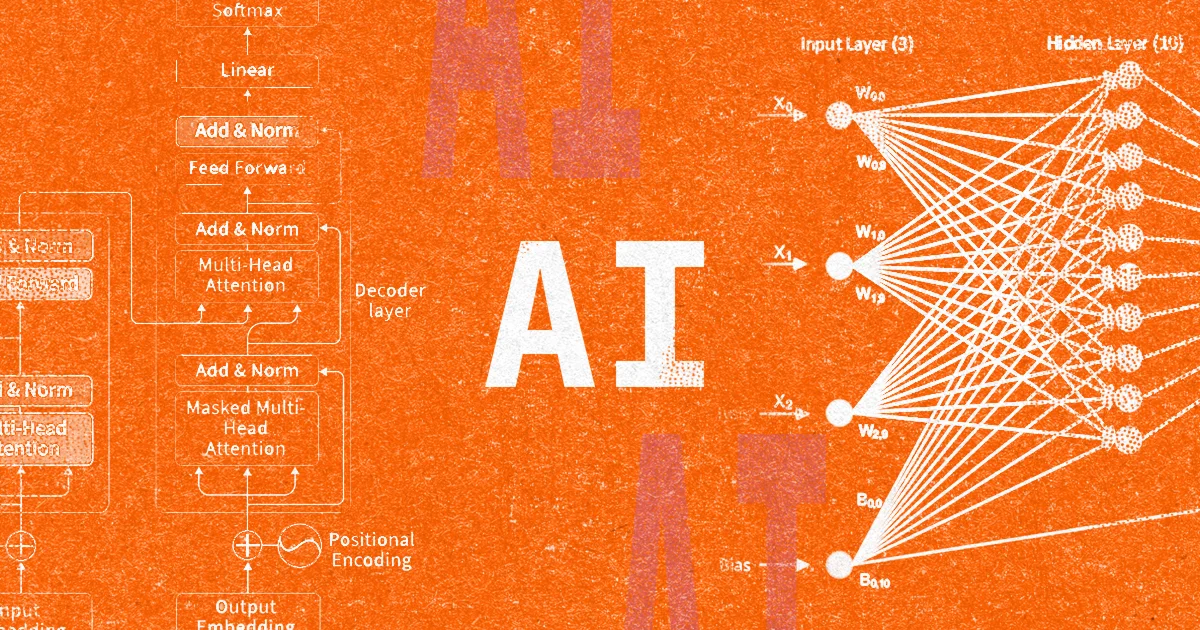

Transformer: Neural network architecture built on attention; the foundation of modern LLMs

Temperature: Setting that controls how random an AI's output is: low = predictable, high = more varied and creative
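Temperature works by dividing the model's raw scores (logits) by a number before turning them into probabilities with softmax. The sketch below uses three hypothetical logits to show that a low temperature concentrates probability on the top token while a high one spreads it out. Many APIs treat a temperature of 0 as "always pick the top token"; this formula cannot divide by zero, so the sketch simply rejects non-positive values.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits into probabilities after dividing by the temperature.

    Temperature below 1 sharpens the distribution (more predictable);
    above 1 flattens it (more varied).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```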

Prompt engineering: Crafting input text to get better AI outputs

Pre-training: Initial training on massive amounts of data, before fine-tuning for specific tasks

Tokenization: Breaking text into tokens the AI can process
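To see how a word can split into several tokens, here is a toy subword tokenizer using greedy longest-match against a small, hypothetical vocabulary. This is a simplification: real LLM tokenizers (for example, byte-pair encoding) learn vocabularies of tens of thousands of pieces from data and split text by their own rules, so actual token boundaries will differ.

```python
# Hypothetical subword vocabulary invented for illustration.
VOCAB = {"un", "believ", "able", "token", "ization", " "}


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match tokenization; unknown characters become single tokens."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest vocabulary piece that starts at position i.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No piece matched: fall back to a single character.
            tokens.append(text[i])
            i += 1
    return tokens


print(tokenize("unbelievable tokenization", VOCAB))
```

Two words become six tokens here, which is why token counts (and context-window limits) do not map one-to-one onto word counts.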

Neural network: AI structure made of layers of connected nodes that learn patterns

Training data: Examples used to teach an AI model

Multimodal AI: AI that handles multiple types of input (text, images, audio, etc.)

Parameter count: Number of learned values in a model (e.g., GPT-3 has 175 billion; OpenAI has not officially disclosed GPT-4's count)
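Parameter counts are plain arithmetic over a model's layers. For a fully connected layer, each input connects to each output with one weight, and each output has one bias, so the layer has n_in * n_out + n_out parameters. The small 784 -> 128 -> 10 network below is a hypothetical example; LLMs reach billions of parameters by stacking many much wider layers.

```python
def dense_layer_params(n_in: int, n_out: int) -> int:
    """One weight per input-output pair plus one bias per output."""
    return n_in * n_out + n_out


def mlp_params(layer_sizes: list[int]) -> int:
    """Total learned values in a stack of fully connected layers."""
    return sum(dense_layer_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 784 inputs -> 128 hidden units -> 10 outputs.
print(mlp_params([784, 128, 10]))  # 101770
```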

Prompt injection: Tricking an AI by hiding malicious instructions in its input, whether typed by a user or embedded in content it reads, such as a web page or document

Top-p (nucleus sampling): Sampling setting, used instead of or alongside temperature, where the AI picks only from the most likely tokens whose probabilities add up to p (e.g., p = 0.9 means 90%)
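Top-p filtering can be written directly from its definition: sort candidate tokens by probability, keep adding them until their total reaches p, renormalize, and sample from what remains. The next-token probabilities below are invented for illustration, and `top_p_filter` is a hypothetical helper name; p is given as a fraction (0.9) rather than a percentage.

```python
import random


def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the smallest set of most likely tokens whose probabilities sum to at
    least p, then renormalize so the kept probabilities sum to 1."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


# Hypothetical next-token probabilities.
next_token = {"cat": 0.5, "dog": 0.3, "fish": 0.15, "rock": 0.05}
filtered = top_p_filter(next_token, p=0.9)
print(filtered)  # "rock" is excluded

# Sample one token from the filtered distribution.
choice = random.choices(list(filtered), weights=list(filtered.values()), k=1)[0]
print(choice)
```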

Quantization: Storing a model's weights at lower numeric precision (e.g., 8-bit instead of 16-bit) so it uses less memory, usually with a slight accuracy loss
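One simple form of quantization is symmetric 8-bit quantization: pick a scale so the largest weight maps to 127, round every weight to an integer in [-127, 127], and multiply back by the scale when the model runs. This is one scheme among several (real libraries often use per-channel scales, zero points, or 4-bit formats), and the weights below are invented. The small round-trip error is the "slight accuracy loss" in the definition.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximately recover the original floats."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.88]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print([round(r, 4) for r in restored])
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(max_error)  # small but not zero
```

Each weight now needs 1 byte instead of 2 (for 16-bit floats), plus one shared scale value.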

Benchmark: Standardized test measuring AI performance on specific tasks (e.g., MMLU, HumanEval)

Agent: AI system that takes actions and uses tools to accomplish goals autonomously

Vector database: Storage optimized for quickly finding similar embeddings (commonly used in RAG)
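The core query a vector database answers is "which stored vectors are most similar to this one?" The in-memory class below answers it by brute force, comparing the query against every stored vector with cosine similarity. It is a teaching stand-in, not how production systems work: real vector databases use approximate nearest-neighbor indexes to stay fast at millions of vectors. The class name, IDs, and vectors are all hypothetical.

```python
import heapq
import math


class TinyVectorStore:
    """Minimal in-memory stand-in for a vector database (brute-force search)."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, item_id: str, vector: list[float]) -> None:
        self.items.append((item_id, vector))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

    def search(self, query: list[float], k: int = 2) -> list[tuple[str, float]]:
        """Return the k stored items most similar to the query, best first."""
        scored = ((item_id, self._cosine(query, vec)) for item_id, vec in self.items)
        return heapq.nlargest(k, scored, key=lambda pair: pair[1])


store = TinyVectorStore()
store.add("doc-refunds", [0.9, 0.1, 0.0])
store.add("doc-shipping", [0.1, 0.9, 0.1])
store.add("doc-hours", [0.0, 0.2, 0.9])
print(store.search([0.8, 0.2, 0.0], k=1))
```

In a RAG pipeline, the query vector would be the embedding of the user's question, and the returned IDs would point to the text passages to add to the prompt.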
